
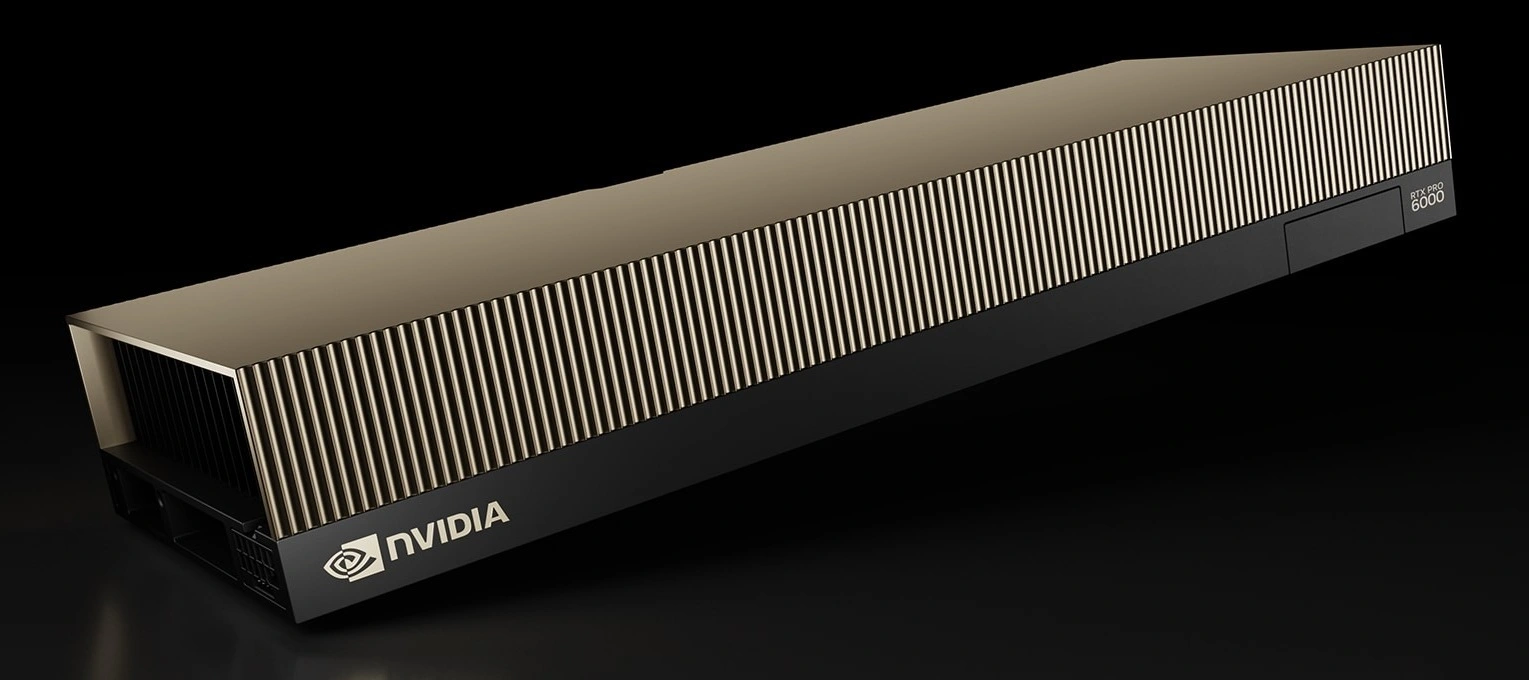

How to monitor GPU-specific threats and anomalous usage

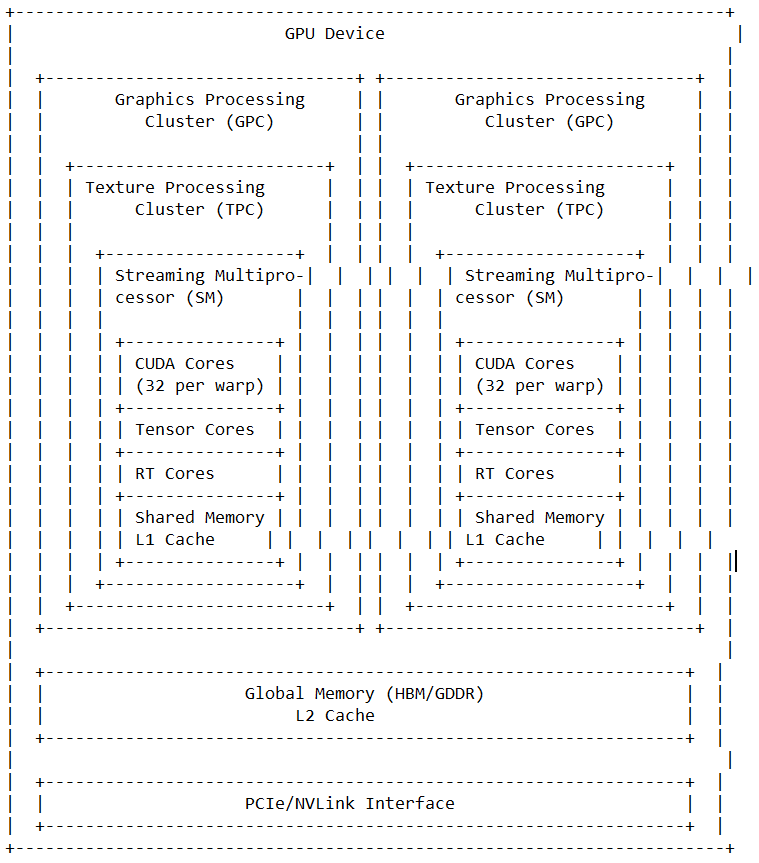

Monitoring GPU-specific threats and anomalous usage is critical for protecting server resources, sensitive data, and AI models. Here’s how to do it effectively and practically.

Use Real-Time GPU Metrics and Alerts

Install GPU-aware monitoring tools such as NVIDIA DCGM (Data Center GPU Manager), Prometheus with GPU exporters, or custom scripts leveraging nvidia-smi. These…
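As a rough illustration of the custom-script option built on nvidia-smi, the sketch below polls a few per-GPU metrics and flags values that cross a threshold. The query fields are standard `nvidia-smi --query-gpu` fields, but the threshold values and the function names (`read_gpu_metrics`, `parse_metrics`, `find_alerts`) are assumptions made for this example, not part of any tool mentioned above. Treat it as a starting point rather than a complete detection system.

```python
import csv
import io
import subprocess

# Standard nvidia-smi query fields, in the order they are requested.
QUERY_FIELDS = ["index", "utilization.gpu", "memory.used", "memory.total", "temperature.gpu"]

# Hypothetical alert thresholds (assumptions); tune them to your own baseline.
MAX_UTIL_PCT = 95
MAX_MEM_FRACTION = 0.90
MAX_TEMP_C = 85


def read_gpu_metrics():
    """Run nvidia-smi and return its CSV output, one line per GPU."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def parse_metrics(csv_text):
    """Parse nvidia-smi CSV text into a list of dicts with numeric values."""
    rows = []
    for record in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if len(record) != len(QUERY_FIELDS):
            continue  # skip blank or malformed lines
        try:
            idx, util, mem_used, mem_total, temp = (float(v) for v in record)
        except ValueError:
            continue  # some GPUs report non-numeric values for unsupported fields
        rows.append({
            "gpu": int(idx),
            "util_pct": util,
            "mem_used_mib": mem_used,
            "mem_total_mib": mem_total,
            "temp_c": temp,
        })
    return rows


def find_alerts(rows):
    """Return a human-readable alert string for each threshold that is exceeded."""
    alerts = []
    for r in rows:
        if r["util_pct"] > MAX_UTIL_PCT:
            alerts.append(f"GPU {r['gpu']}: utilization {r['util_pct']:.0f}%")
        if r["mem_total_mib"] > 0 and r["mem_used_mib"] / r["mem_total_mib"] > MAX_MEM_FRACTION:
            alerts.append(f"GPU {r['gpu']}: memory {r['mem_used_mib']:.0f} MiB in use")
        if r["temp_c"] > MAX_TEMP_C:
            alerts.append(f"GPU {r['gpu']}: temperature {r['temp_c']:.0f} C")
    return alerts
```

On a host with the NVIDIA driver installed, calling `find_alerts(parse_metrics(read_gpu_metrics()))` from a scheduled job or a polling loop would yield the current alerts. Parsing is kept separate from the subprocess call so the threshold logic can be exercised with recorded output on a machine without a GPU.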