TensorFlow GPU Servers: Unleash the True Power of Machine Learning

- Lightning-Fast Model Training

- Enterprise-Grade Infrastructure

- Guaranteed Resources

- Scalable & Cost-Effective


TensorFlow GPU Server Price Plans

| GPU | Price |
|---|---|
| | $900/month |
| 2x A100 80GB | $2,300/month |
| 2x RTX 6000 Ada | $1,500/month |
| 2x A40 | $1,500/month |
| 2x RTX A6000 | $1,500/month |
| 2x RTX 4000 Ada | $450/month |
| | $370/month |

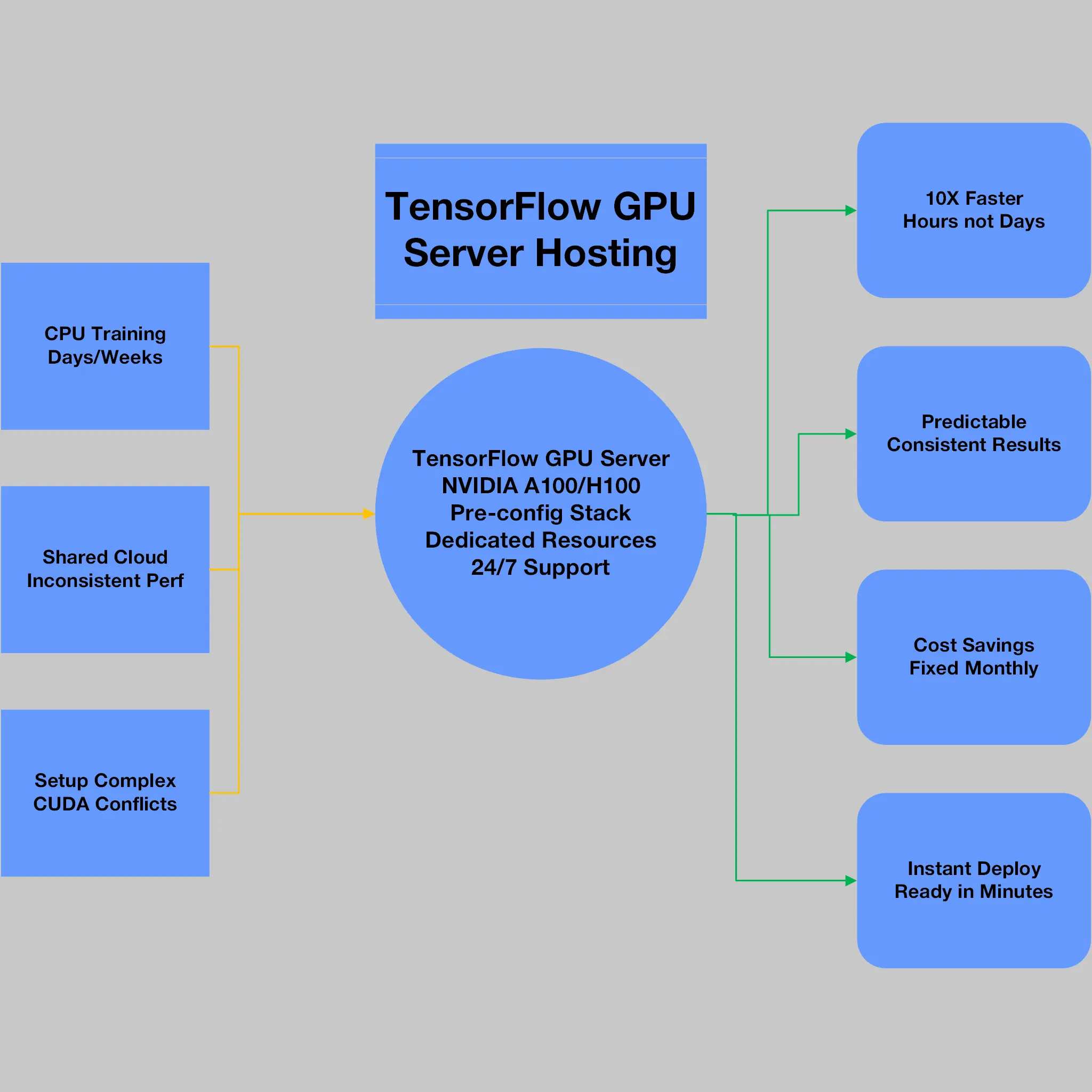

Why Your Next AI Breakthrough Depends on GPU Acceleration

Picture this: you’ve spent weeks crafting the perfect neural network architecture, your dataset is pristine, and your hypothesis is sound. You hit “train” and… wait. Hours turn into days, days into weeks.

Sound familiar? Every data scientist has been there. The difference between rapid iteration and endless waiting often comes down to one thing: GPU acceleration.

TensorFlow GPU servers aren’t just faster hardware; they’re your competitive advantage in the AI race. While your competitors train on CPUs or fight for shared cloud resources, you’re completing workloads in minutes rather than hours, testing new architectures daily instead of monthly, and shipping models while others are still debugging their first prototype.

Salient Features of TensorFlow GPU Servers

Optimized TensorFlow Stack

Pre-installed TensorFlow 2.x with CUDA 12.x, cuDNN, and TensorRT libraries perfectly matched for maximum GPU performance and zero compatibility issues.

High-Memory NVIDIA GPUs

Choose from RTX PRO 6000 Blackwell (96 GB), A100 (80 GB), or H100 (80 GB) cards with massive VRAM capacity for training large language models and complex computer vision networks.

Multi-GPU Scaling

Seamless TensorFlow distributed training across 2, 4, or 8 GPUs with NVLink interconnects for near-linear performance scaling and shorter time to convergence.
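A minimal data-parallel sketch using tf.distribute.MirroredStrategy, TensorFlow’s built-in strategy for multiple GPUs in one machine. The model, layer sizes, per-GPU batch size, and synthetic data are placeholders rather than a recommended configuration; on a machine without GPUs the strategy simply runs a single replica on the CPU.

```python
import tensorflow as tf

# One model replica per visible GPU; each global batch is split across the
# replicas and their gradients are all-reduced before every weight update.
strategy = tf.distribute.MirroredStrategy()
print("Replicas in sync:", strategy.num_replicas_in_sync)

# Hypothetical per-GPU batch size; the global batch grows with the GPU count.
PER_REPLICA_BATCH = 64
global_batch = PER_REPLICA_BATCH * strategy.num_replicas_in_sync

with strategy.scope():
    # Variables created inside the scope are mirrored on every replica.
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(32,)),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dense(10),
    ])
    model.compile(
        optimizer="adam",
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    )

# Synthetic stand-in data: 1,024 examples, 32 features, 10 classes.
x = tf.random.normal((1024, 32))
y = tf.random.uniform((1024,), maxval=10, dtype=tf.int32)
dataset = tf.data.Dataset.from_tensor_slices((x, y)).batch(global_batch)

# Keras distributes each global batch across the replicas automatically.
history = model.fit(dataset, epochs=1, verbose=0)
print("Loss after one epoch:", history.history["loss"][-1])
```

Growing the global batch with the replica count keeps the work per GPU constant, which is what makes near-linear scaling possible; larger global batches may also call for retuning the learning rate.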

NVMe Storage Performance

Lightning-fast NVMe SSDs eliminate data loading bottlenecks, keeping GPUs saturated during training with rapid dataset access and model checkpointing.
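Fast NVMe storage pays off only when the input pipeline reads and preprocesses data in parallel and overlaps that work with training. Here is a sketch of a tf.data pipeline that does both, assuming a hypothetical dataset sharded into CSV files with four float features and an integer label per line; the file layout, shard names, and buffer sizes are illustrative.

```python
import os
import tempfile

import tensorflow as tf

AUTOTUNE = tf.data.AUTOTUNE
# Hypothetical CSV layout: four float features followed by an integer label.
RECORD_DEFAULTS = [[0.0]] * 4 + [[0]]

def parse_line(line):
    *features, label = tf.io.decode_csv(line, record_defaults=RECORD_DEFAULTS)
    return tf.stack(features), label

def make_pipeline(file_pattern: str, batch_size: int) -> tf.data.Dataset:
    files = tf.data.Dataset.list_files(file_pattern, shuffle=True)
    # Read several shards concurrently so the drive serves parallel reads.
    ds = files.interleave(
        lambda path: tf.data.TextLineDataset(path),
        cycle_length=4,
        num_parallel_calls=AUTOTUNE,
    )
    # Parse lines on multiple CPU threads.
    ds = ds.map(parse_line, num_parallel_calls=AUTOTUNE)
    ds = ds.shuffle(10_000).batch(batch_size)
    # Prepare upcoming batches while the GPU works on the current one.
    return ds.prefetch(AUTOTUNE)

# Usage with two small synthetic shards written to a temporary directory.
data_dir = tempfile.mkdtemp()
for shard in range(2):
    with open(os.path.join(data_dir, f"part-{shard}.csv"), "w") as f:
        for i in range(50):
            f.write(f"{i},{i + 1},{i + 2},{i + 3},{i % 3}\n")

dataset = make_pipeline(os.path.join(data_dir, "part-*.csv"), batch_size=32)
num_examples = sum(int(features.shape[0]) for features, _ in dataset)
print("Examples read:", num_examples)
```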

Remote Development Environment

Pre-configured JupyterLab, VS Code Server, and SSH access for secure remote coding, real-time collaboration, and seamless model deployment workflows.

Enterprise Security & Monitoring

Hardware-level isolation, encrypted storage, VPN integration, plus comprehensive GPU monitoring with nvidia-smi, TensorBoard, and Grafana dashboards.

Why computeman TensorFlow Servers?

Slash Time-to-Market by 90%

Transform weeks of model training into hours with dedicated NVIDIA GPUs, letting you iterate faster, test more architectures, and ship AI solutions while competitors are still waiting for their first epoch to complete.

Predictable Costs, Unlimited Potential

Fixed monthly pricing eliminates surprise cloud bills and preemption risks. Run 24/7 training jobs, hyperparameter sweeps, and inference workloads without worrying about hourly charges or sudden shutdowns.

Zero Setup Frustration

Skip the nightmare of CUDA installation, version conflicts, and driver issues. Your GPU server arrives with perfectly tuned TensorFlow environments, JupyterLab, and all dependencies ready to run your first model in minutes.

Own Your AI Competitive Edge

While others share cloud resources and face performance throttling, you control dedicated hardware that delivers consistent speeds, protects proprietary datasets, and scales seamlessly as your AI ambitions grow.

Frequently Asked Questions

How much faster will my TensorFlow models train on a GPU server?

Most customers see 5-20× speed improvements over CPU training, depending on model architecture. Complex neural networks like transformers or convolutional networks benefit the most. Simple linear models might only see 2-3× gains, but deep learning workloads regularly hit double-digit acceleration.

What happens if I run out of GPU memory during training?

You’ll get a clear error message (TensorFlow typically raises a ResourceExhaustedError), and there are several solutions: reduce the batch size, enable mixed precision training (float16 activations take half the memory of float32 ones, which often lets you fit a much larger batch), or upgrade to a higher-memory GPU. We’re happy to help you right-size your hardware based on your specific models.
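If you want to automate the “reduce batch size” step, one simple approach is to catch TensorFlow’s out-of-memory exception and retry with a smaller batch. This sketch assumes a hypothetical train_fn(batch_size) callable that builds your input pipeline and runs training. GPU out-of-memory errors usually surface as tf.errors.ResourceExhaustedError, but memory can stay fragmented after a failure, so restarting the process between attempts is the more robust option for long jobs.

```python
import tensorflow as tf

def train_with_batch_backoff(train_fn, batch_size, min_batch_size=1):
    """Run train_fn(batch_size), halving the batch size after each GPU OOM.

    train_fn is a hypothetical callable you supply. Returns a tuple of
    (batch size that worked, train_fn's return value).
    """
    while True:
        try:
            return batch_size, train_fn(batch_size)
        except tf.errors.ResourceExhaustedError:
            if batch_size // 2 < min_batch_size:
                raise  # even the smallest allowed batch does not fit
            batch_size //= 2
            print(f"Out of GPU memory; retrying with batch size {batch_size}")

# Usage with a stand-in train_fn that "runs out of memory" above 64 examples.
def fake_train(batch_size):
    if batch_size > 64:
        raise tf.errors.ResourceExhaustedError(None, None, "simulated OOM")
    return "trained"

chosen_batch, result = train_with_batch_backoff(fake_train, batch_size=256)
print(chosen_batch, result)
```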

How do I get my data onto the server?

Several options: secure file transfer (SFTP), direct upload through JupyterLab, mounting cloud storage buckets, or even shipping drives for massive datasets. Most customers start by uploading a sample dataset to test everything, then migrate their full data pipeline.

What if my model is too large for a single GPU?

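TensorFlow’s distribution strategies, such as tf.distribute.MirroredStrategy, spread training across multiple GPUs by giving each GPU a full copy of the model and a slice of every batch. That speeds up training, but each GPU must still hold the whole model. For models that don’t fit on one GPU, you need model parallelism techniques that split the model itself across devices. We offer multi-GPU servers for both approaches, and for truly massive models we can set up multi-server clusters that work together seamlessly.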
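A quick way to tell whether a model fits on one GPU is a back-of-the-envelope memory estimate. The sketch below uses a common rule of thumb (an assumption, not a TensorFlow formula): full float32 training with the Adam optimizer needs about 16 bytes per parameter for weights, gradients, and Adam’s two moment estimates, before counting activations. The 80% headroom factor is likewise an illustrative guess that leaves room for activations.

```python
# Rule of thumb (assumption): float32 weights (4 bytes) + gradients (4 bytes)
# + Adam's first and second moment estimates (4 + 4 bytes) per parameter.
BYTES_PER_PARAM_FP32_ADAM = 4 + 4 + 8

def training_state_gb(num_params: int) -> float:
    """Approximate weight, gradient and optimizer state in GB (1 GB = 1e9 bytes).
    Activations, workspace buffers and framework overhead are not included."""
    return num_params * BYTES_PER_PARAM_FP32_ADAM / 1e9

def fits_on_one_gpu(num_params: int, vram_gb: float, headroom: float = 0.8) -> bool:
    """True if the model state fits in `headroom` of VRAM (the rest is left
    for activations; 0.8 is an illustrative guess, not a measured value)."""
    return training_state_gb(num_params) <= vram_gb * headroom

for params in (1_000_000_000, 3_000_000_000, 7_000_000_000):
    print(
        f"{params / 1e9:.0f}B parameters: ~{training_state_gb(params):.0f} GB of state, "
        f"fits on one 80 GB GPU: {fits_on_one_gpu(params, vram_gb=80)}"
    )
```

By this estimate a 7-billion-parameter model needs about 112 GB for training state alone, more than one 80 GB card holds, so giving every GPU a full replica would not help; that is the case for model parallelism or techniques that shard optimizer state across devices.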

Do I need to know CUDA programming to use these servers?

Not at all. TensorFlow handles all the GPU programming automatically. Just write normal Python code and TensorFlow optimizes everything behind the scenes. Our servers come pre-configured with all the drivers and libraries, so you can start training immediately without any GPU expertise.
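For example, this sketch lists the GPUs TensorFlow detects and runs an ordinary matrix multiplication. TensorFlow places the operation on the first GPU when one is available and on the CPU otherwise, with no CUDA code involved; the matrix sizes are arbitrary.

```python
import tensorflow as tf

# Which GPUs can TensorFlow see? (An empty list means CPU-only.)
gpus = tf.config.list_physical_devices("GPU")
print(f"GPUs visible to TensorFlow: {len(gpus)}")

# Plain TensorFlow code: no device annotations and no CUDA kernels.
a = tf.random.normal((512, 512))
b = tf.random.normal((512, 512))
c = tf.matmul(a, b)

# The device string ends in GPU:0 when TensorFlow placed the op on a GPU.
print("matmul ran on:", c.device)
```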

Can multiple team members use the same GPU server?

Yes! You can create separate user accounts, run multiple Jupyter sessions, or use containerization to isolate different projects. Just remember that GPUs work best when focused on one intensive task at a time: sharing works well for development, but not for simultaneous heavy training runs.
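One practical detail when several people share a server: by default TensorFlow reserves nearly all of a GPU’s memory for the first process that touches it. A minimal “good neighbor” sketch, meant to run at the start of each user’s script, turns on memory growth and can optionally pin the process to a single GPU. Assigning each person a GPU index is a hypothetical convention; setting the CUDA_VISIBLE_DEVICES environment variable before launching Python achieves similar pinning.

```python
import tensorflow as tf

def share_friendly_gpu_setup(gpu_index=None):
    """Configure this process to coexist with other users' processes.

    Must run before any TensorFlow op initializes the GPUs, otherwise
    TensorFlow raises a RuntimeError. gpu_index is a hypothetical
    per-user assignment; None keeps every GPU visible.
    """
    gpus = tf.config.list_physical_devices("GPU")
    if gpu_index is not None and gpus:
        gpus = [gpus[gpu_index]]
        tf.config.set_visible_devices(gpus, "GPU")  # hide the other GPUs
    for gpu in gpus:
        # Allocate memory as needed instead of reserving most of it up front.
        tf.config.experimental.set_memory_growth(gpu, True)
    return gpus

visible = share_friendly_gpu_setup(gpu_index=None)
print("GPUs configured for this process:", [gpu.name for gpu in visible])
```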

Is my code and data secure on a dedicated GPU server?

Absolutely. Unlike shared cloud instances, you’re the only tenant on the physical hardware. Add encrypted storage, VPN access, and firewall rules, and your intellectual property stays completely private. Perfect for proprietary datasets or competitive research.

How quickly can I get started?

Most servers deploy within 15-30 minutes. You’ll receive login credentials and can immediately access JupyterLab or SSH to start uploading code. The longest part is usually transferring your dataset. Everything else is ready to go from day one.

The Science Behind Speed: How TensorFlow Exploits GPU Architecture

Parallel Processing Powerhouse

Modern GPUs pack thousands of cores compared to a CPU’s handful. Where a high-end processor might have 16-32 cores, an NVIDIA H100 (SXM5) has 16,896 CUDA cores plus 528 specialized Tensor Cores. This massive parallelism transforms how TensorFlow handles the mathematical foundation of deep learning:

- Matrix multiplications (the backbone of neural networks) execute across thousands of cores simultaneously.

- Element-wise operations like ReLU activations process entire tensors in parallel.

- Gradient computations stream weights, activations, and gradients through GPU memory at up to 3.35 TB/s (H100 SXM5).
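To see why matrix multiplication maps so well onto thousands of cores, count the work: every element of the output is an independent dot product, so all of them can be computed at once. The arithmetic below is a sketch; the peak rate is an assumption taken from the H100 SXM datasheet figure for dense FP16/BF16 Tensor Core math (about 989 TFLOPS), and real kernels run below peak.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for C = A @ B with A of shape (m, k) and B of shape (k, n).

    Each of the m * n output elements is an independent dot product of
    length k: k multiply-adds, conventionally counted as 2k FLOPs. That
    independence is what lets a GPU compute them in parallel.
    """
    return 2 * m * k * n

def ideal_seconds(flops: int, peak_flops_per_second: float) -> float:
    """Lower bound on run time if the hardware sustained its peak rate."""
    return flops / peak_flops_per_second

ASSUMED_PEAK = 989e12  # assumption: H100 SXM dense FP16/BF16 Tensor Core peak

flops = matmul_flops(8192, 8192, 8192)
print(f"8192 x 8192 matmul: {flops:.3e} FLOPs")
print(f"ideal time at assumed peak: {ideal_seconds(flops, ASSUMED_PEAK) * 1e3:.2f} ms")
```

For the 8192 × 8192 case that is about 1.1 × 10^12 FLOPs, or roughly 1.1 ms at the assumed peak; achieved throughput depends on data type, matrix shapes, and memory bandwidth.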

The CUDA Advantage

TensorFlow’s tight integration with NVIDIA’s CUDA ecosystem means your Python code automatically benefits from years of GPU optimization. Functions like tf.matmul and tf.nn.conv2d dispatch to NVIDIA’s hand-tuned cuBLAS and cuDNN kernels that squeeze every FLOP from the silicon. Enable mixed precision with a single line, tf.keras.mixed_precision.set_global_policy('mixed_float16'), and on GPUs with Tensor Cores throughput often jumps 2-3× while activation memory roughly halves.
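In a full Keras model, the mixed precision policy is usually paired with a float32 output layer, as the TensorFlow mixed precision guide recommends for numerical stability. A minimal sketch; the layer names, sizes, and input shape are placeholders.

```python
import tensorflow as tf

# Compute in float16 (Tensor Core friendly) while keeping variables in float32.
tf.keras.mixed_precision.set_global_policy("mixed_float16")

def build_classifier(num_features: int, num_classes: int) -> tf.keras.Model:
    inputs = tf.keras.Input(shape=(num_features,))
    x = tf.keras.layers.Dense(256, activation="relu", name="hidden")(inputs)
    logits = tf.keras.layers.Dense(num_classes, name="logits")(x)
    # Run the final softmax in float32 so probabilities and the loss stay
    # numerically stable.
    outputs = tf.keras.layers.Activation("softmax", dtype="float32", name="probs")(logits)
    return tf.keras.Model(inputs, outputs)

model = build_classifier(num_features=32, num_classes=10)
# Under the mixed_float16 policy, compile() adds loss scaling automatically.
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")

hidden = model.get_layer("hidden")
print("policy:", tf.keras.mixed_precision.global_policy().name)
print("hidden layer computes in", hidden.compute_dtype, "and stores weights in", hidden.variable_dtype)

probs = model(tf.zeros((2, 32)))
print("output dtype:", probs.dtype)
```

The policy is global, so call tf.keras.mixed_precision.set_global_policy('float32') to return to full precision for models built afterward.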

Dedicated GPU Servers vs. Cloud: The Economics of Performance

Why Single-Tenant Beats Shared Every Time

Cloud providers love to talk about “infinite scalability,” but here’s what they don’t mention: noisy neighbors. When multiple users share the same physical GPU through virtualization, performance becomes unpredictable. Your training job might complete in 2 hours today, 6 hours tomorrow, depending on who else is using the box.

Dedicated TensorFlow GPU servers eliminate this variability entirely. Every CUDA core, every gigabyte of VRAM, every PCIe lane belongs to you. The result? Consistent performance that lets you accurately estimate project timelines and optimize hyperparameters with confidence.

Compute: Why Tensor Cores Change Everything

Not all GPU cores are equal. NVIDIA’s Tensor Cores are purpose-built for the mixed-precision math that dominates modern deep learning. Together, the 528 Tensor Cores of an H100 (SXM) deliver roughly 989 TFLOPS of dense FP16/BF16 matrix math, nearly 1,000 trillion operations per second, and about 1,979 TOPS at INT8 precision.

TensorFlow automatically detects and uses these specialized units when you enable mixed precision. The gains can be dramatic: speedups of 2-4× on transformer architectures and 1.5-2× on convolutional networks are common, though results depend on the model, batch size, and GPU.
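Tensor Core speedups from mixed precision require a GPU with NVIDIA compute capability 7.0 or higher (Volta and newer), the same threshold the TensorFlow mixed precision guide uses. A short sketch to check what a server exposes, using tf.config.experimental.get_device_details; the report format is illustrative.

```python
import tensorflow as tf

def tensor_core_report():
    """Return (device name, compute capability, has FP16 Tensor Cores) per GPU."""
    report = []
    for gpu in tf.config.list_physical_devices("GPU"):
        details = tf.config.experimental.get_device_details(gpu)
        capability = details.get("compute_capability")  # e.g. (8, 0) for an A100
        name = details.get("device_name", gpu.name)
        # FP16 Tensor Cores first appeared with compute capability 7.0 (Volta).
        has_tensor_cores = capability is not None and tuple(capability) >= (7, 0)
        report.append((name, capability, has_tensor_cores))
    return report

report = tensor_core_report()
if not report:
    print("No GPU visible to TensorFlow.")
for name, capability, has_tensor_cores in report:
    print(f"{name}: compute capability {capability}, FP16 Tensor Cores: {has_tensor_cores}")
```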

Software Stack: From Zero to Training in Minutes

Pre-Configured Environments

The biggest obstacle to GPU adoption isn’t hardware—it’s software configuration. CUDA versions must match TensorFlow builds, cuDNN libraries need exact compatibility, and driver conflicts can waste days of setup time.

Our TensorFlow GPU servers ship with battle-tested software stacks:

- Ubuntu 24.04 LTS with hardened kernel and security updates

- NVIDIA Driver 545.x matching the latest CUDA 12.3 toolkit

- cuDNN 8.9 and TensorRT 8.6 for maximum performance

- TensorFlow 2.14+ compiled with full GPU optimizations

- JupyterLab and VS Code Server for remote development

- Docker with NVIDIA Container Toolkit for containerized workflows
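To confirm what a given environment actually contains, TensorFlow can report the CUDA and cuDNN versions its build was compiled against, alongside the GPUs it can see. A minimal sketch; build-info keys vary by platform and build, so missing entries show up as None, and the installed driver version is reported separately by nvidia-smi.

```python
import tensorflow as tf

def describe_stack() -> dict:
    """Summarize the TensorFlow build and the GPUs it can see."""
    info = tf.sysconfig.get_build_info()  # available keys vary by platform/build
    return {
        "tensorflow": tf.__version__,
        "cuda_build": bool(info.get("is_cuda_build", False)),
        "cuda_version": info.get("cuda_version"),    # None if not reported
        "cudnn_version": info.get("cudnn_version"),  # None if not reported
        "visible_gpus": len(tf.config.list_physical_devices("GPU")),
    }

stack = describe_stack()
for key, value in stack.items():
    print(f"{key:>13}: {value}")
```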

Container-Ready Deployment

Modern ML workflows live in containers. Our servers include the NVIDIA Container Runtime, so you can docker run --gpus all tensorflow/tensorflow:latest-gpu and immediately access GPU acceleration. Mount your code, attach your datasets, and start training with no dependency hunting or version conflicts.

Testimonials

“Switching to a dedicated TensorFlow GPU server was a game-changer for our startup. What used to take 3 days of training now finishes in 4 hours. No more babysitting cloud instances that randomly shut down mid-execution, no more surprise bills when we needed extra compute. Our team can finally iterate at the speed of our ideas. We’ve shipped more ML features in the last quarter than in the entire previous year. Worth every penny.”

Blanka Kalivas, Engineer, Thinking Gears