A graphics processing unit (GPU) is a massively parallel processor designed to execute thousands of lightweight threads concurrently. Originally built to rasterize and shade pixels for 3D graphics, modern GPUs accelerate a wide range of data-parallel workloads, from deep learning and data analytics to scientific computing, by combining high-throughput compute units, wide memory bandwidth, and specialized tensor/matrix engines.

Since 2008, GPUs have been used for computationally demanding applications. The architecture and programming model of a GPU differ significantly from those of a single-chip CPU. GPUs are designed for specific classes of applications, many of which have been successfully ported to them. Applications that can take advantage of the parallel architecture of a GPU typically share the characteristics listed below.

- Compute-intensive applications

- Significant parallelism in application

- Throughput is more important than latency

When dedicated servers use CPUs (Central Processing Units) or FPGAs (Field-Programmable Gate Arrays), scaling up performance usually means adding more and more cores. This can quickly make things much more expensive.

These days, GPUs (Graphics Processing Units) aren’t confined to graphics cards; they power cutting-edge artificial intelligence (AI) and deep learning applications. As GPUs have evolved, they’ve come to be used for far more than graphics, speeding up tasks like encryption and data analysis through a technique called GPGPU (general-purpose computing on graphics processing units).

To keep up with the ever-growing needs of modern computing, multi-GPU systems have become a game-changer. By combining several GPUs in one system, organizations can get much more performance than a single GPU could provide alone. But as these setups get bigger and more complicated, one of the real challenges is managing memory effectively among all those GPUs.

So, what is a GPU, and why is it so important? Simply put, a GPU is a special kind of electronic circuit designed to do lots of mathematical calculations very quickly. It’s built to handle repetitive calculations like those needed for rendering graphics, training AI models, or editing videos by performing the same operation on large chunks of data at once. This makes GPUs fantastic for jobs where lots of similar tasks need to be done simultaneously.

Originally, GPUs were designed to take the heavy lifting of graphics off the CPU, allowing computers to handle more complex visuals and freeing up the CPU for other work. Back in the late 1980s, computer makers started using basic graphics accelerators to help with things like drawing windows and text, which made computers faster and more responsive. By the early 1990s, advances like SVGA (Super Video Graphics Array) let computers show better resolution and more colors—just what the fast-growing video game industry needed to push the envelope on 3D graphics.

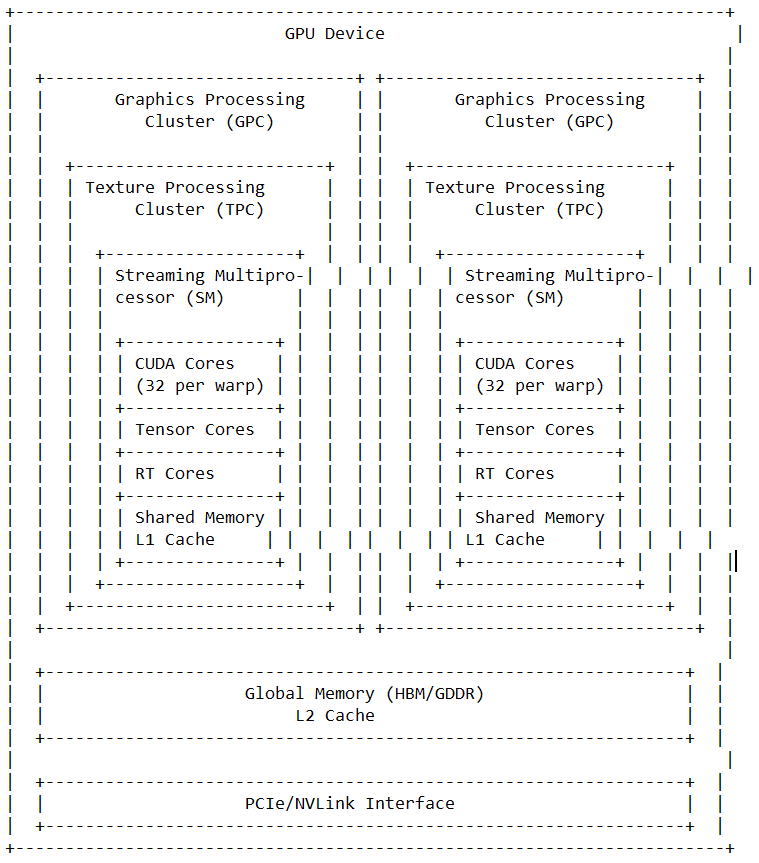

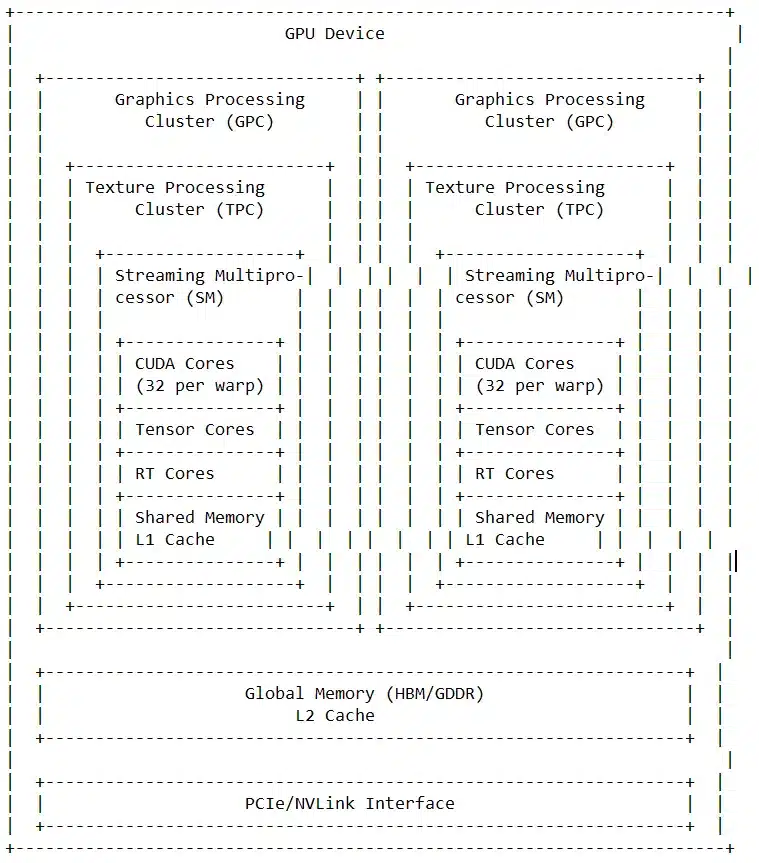

Key Architectural Concepts

A contemporary data center GPU integrates:

- Many-core streaming multiprocessors (SMs) or compute units (CUs) that execute SIMT/SIMD-style threads for high occupancy and latency hiding.

- Specialized tensor/matrix engines (e.g., NVIDIA Tensor Cores; AMD matrix cores in CDNA) that accelerate GEMM and transformer-style operations with FP16/FP8/mixed precision arithmetic.

- A deep memory hierarchy: large on-package HBM stacks delivering multi-terabyte/s bandwidth; sizable L2/Infinity Cache; software-managed shared memory close to compute; and PCIe/NVLink/Infinity Fabric interconnects for multi-GPU scaling.

- Hardware and software features for secure multi-tenancy and partitioning (MIG-like partitioning, spatial sharing, and PTX-level bounds checking research).

These components enable GPUs to keep arithmetic units saturated by overlapping computation with data movement using asynchronous pipelines and by exposing locality at multiple granularities (threads, thread blocks, clusters).

Why GPUs Excel at Parallel Workloads

- Throughput orientation: thousands of threads hide long-latency memory operations by context switching without heavy scheduling overhead.

- Wide memory bandwidth: HBM3/HBM3E delivers 1–5+ TB/s, crucial for bandwidth-bound kernels and large models.

- Mixed precision and transformer engines: FP8/FP16 paths deliver vendor-reported training speedups of up to roughly 9× and large inference gains versus prior generations, especially on transformer workloads.

- Scalable interconnects: NVLink/NVSwitch and Infinity Fabric enable model/data parallelism across dozens to hundreds of GPUs.
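The latency-hiding point above can be made concrete with Little's law (concurrency = throughput × latency). The sketch below is only a back-of-the-envelope model: the 500 ns memory latency and the 16 bytes each thread keeps outstanding are assumed, illustrative values, and the function names are hypothetical. Only the 3 TB/s bandwidth falls within the range quoted above.

```python
def bytes_in_flight(bandwidth_gb_per_s: int, latency_ns: int) -> int:
    """Little's law: data in flight = throughput x latency.

    GB/s multiplied by ns yields bytes directly (1e9 B/s * 1e-9 s = 1 B).
    """
    return bandwidth_gb_per_s * latency_ns


def threads_needed(bandwidth_gb_per_s: int, latency_ns: int,
                   bytes_per_thread: int) -> int:
    """Threads required if each one keeps `bytes_per_thread` outstanding."""
    in_flight = bytes_in_flight(bandwidth_gb_per_s, latency_ns)
    return -(-in_flight // bytes_per_thread)  # ceiling division


# Assumed values: 3 TB/s bandwidth, 500 ns latency, 16 B per thread.
print(bytes_in_flight(3000, 500))     # 1500000 bytes must be in flight
print(threads_needed(3000, 500, 16))  # 93750 threads
```

Under these assumptions, tens of thousands of threads must have a request outstanding at any moment just to keep the memory system busy, which is why GPUs rely on very cheap switching between resident threads.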

Workloads Beyond Graphics

- Deep learning training/inference: transformer models dominate, benefiting from tensor engines, FP8, and large HBM capacity.

- Graph analytics and irregular workloads: architectural differences influence algorithmic efficiency; some traversal-heavy kernels can favor AMD-style wavefronts (the counterpart of NVIDIA warps) and shared memory organization.

- Scientific/HPC: DP/DPX instructions and large caches accelerate genomics, sparse linear algebra, and stencil codes.

- Data analytics and databases: KNN and other primitives are frequently optimized to exploit GPU parallelism.

Nuanced Performance Realities

Specialized tensor cores shine on compute-bound dense GEMMs, but do not magically fix bandwidth ceilings. A 2025 analysis found that for memory-bound kernels (e.g., SpMV, some stencils), tensor cores offer limited theoretical speedup (≤1.33× DP) and often underperform well-optimized CUDA-core paths in practice. This underscores the importance of roofline-style thinking: match the kernel’s arithmetic intensity to the right units and optimize memory access patterns.
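A minimal roofline sketch shows why faster math units do not help a bandwidth-bound kernel. The peak rates (60 and 1000 TFLOP/s), the 3 TB/s bandwidth, and the arithmetic intensities are hypothetical round numbers chosen for illustration, not measurements of any specific GPU. This simple model also does not reproduce the ≤1.33× bound cited above; it only illustrates the general ceiling.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_per_s: float,
                      flops_per_byte: float) -> float:
    """Roofline: performance is capped by compute or by memory traffic."""
    return min(peak_tflops, bandwidth_tb_per_s * flops_per_byte)


def is_memory_bound(peak_tflops: float, bandwidth_tb_per_s: float,
                    flops_per_byte: float) -> bool:
    """True when the bandwidth ceiling lies below the compute peak."""
    return bandwidth_tb_per_s * flops_per_byte < peak_tflops


# Hypothetical device: general-purpose cores vs. tensor cores, same HBM.
VECTOR_PEAK, TENSOR_PEAK, BANDWIDTH = 60.0, 1000.0, 3.0

for name, intensity in [("SpMV-like", 0.25), ("dense GEMM", 500.0)]:
    vec = attainable_tflops(VECTOR_PEAK, BANDWIDTH, intensity)
    ten = attainable_tflops(TENSOR_PEAK, BANDWIDTH, intensity)
    print(f"{name}: vector {vec} TFLOP/s, tensor {ten} TFLOP/s")
```

With these numbers the low-intensity kernel reaches the same 0.75 TFLOP/s on either unit, while the dense GEMM gains the full benefit of the tensor path.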

Multi-Tenancy, Sharing, and Scheduling

Large clusters increasingly share GPUs across jobs. Recent schedulers demonstrate that judicious, non-preemptive sharing with gradient accumulation can reduce average completion time by 27–33% versus state-of-the-art preemptive schedulers, while preserving convergence. At the safety layer, compiler-level bounds checking (e.g., Guardian) improves memory isolation for spatially shared GPUs.
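To see why gradient accumulation can preserve convergence, consider a toy one-parameter linear model with a mean-squared-error loss. The sketch below is not the scheduler described above; it only illustrates the underlying identity that averaging gradients over equal-sized micro-batches reproduces the full-batch gradient, so a job given a smaller slice of a shared GPU can still take the same optimizer step. The model, data, and function names are hypothetical.

```python
def grad_mse(w: float, xs: list, ys: list) -> float:
    """Gradient of mean((w*x - y)**2) with respect to w."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w: float, xs: list, ys: list, micro_batch: int) -> float:
    """Average per-micro-batch gradients instead of one large batch."""
    n = len(xs)
    if n % micro_batch != 0:
        raise ValueError("batch must split into equal micro-batches")
    steps = n // micro_batch
    total = 0.0
    for start in range(0, n, micro_batch):
        end = start + micro_batch
        # Scale each partial gradient so the sum equals the full-batch mean.
        total += grad_mse(w, xs[start:end], ys[start:end]) / steps
    return total


xs, ys, w = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0], 0.5
print(grad_mse(w, xs, ys))             # full batch of 4
print(accumulated_grad(w, xs, ys, 2))  # two micro-batches of 2
```

Both calls print the same value, at the cost of running the micro-batches one after another rather than in a single pass.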

Memory Capacity and Bandwidth Trends

- HBM3E progress: industry now ships 8‑high and 12‑high stacks; 12‑high 36 GB packages surpass 1.2 TB/s per stack while improving power efficiency, directly enabling larger LLMs per accelerator and reducing off-chip traffic.

- Vendor validations and thermal/power challenges continue; 8‑high parts have cleared critical qualification gates for leading AI processors, with 12‑high devices advancing through testing.
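The stack figures above translate into package-level numbers with simple arithmetic. In the sketch below, the 36 GB, 12-high, and 1.2 TB/s per-stack values come from the text; the count of eight stacks per accelerator is an assumption made purely for illustration, and the function names are hypothetical.

```python
def per_die_gb(stack_gb: int, dies_per_stack: int) -> float:
    """Capacity contributed by each DRAM die in a stack."""
    return stack_gb / dies_per_stack


def package_totals(stacks: int, stack_gb: int, stack_gb_per_s: int) -> tuple:
    """Aggregate capacity (GB) and bandwidth (GB/s) across all stacks."""
    return stacks * stack_gb, stacks * stack_gb_per_s


print(per_die_gb(36, 12))           # 3.0 GB per die in a 12-high stack
print(package_totals(8, 36, 1200))  # (288, 9600) with an assumed 8 stacks
```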

Representative Architectures in 2025

- NVIDIA Hopper (H100): 80B transistors, FP8 transformer engine, thread-block clusters, Tensor Memory Accelerator, secure MIG, and NVLink-scale interconnects, which together give up to 30× inference speedups on large language models versus A100, depending on workload.

- AMD CDNA 3 (MI300X): chiplet design with 304 CUs, 192 GB HBM3 and 5.3 TB/s bandwidth, 256 MB Infinity Cache, and 4 TB/s on-package fabric for coherent CPU/GPU memory sharing in the MI300A APU variant.

Choosing and Using a GPU

Selection hinges on:

- Model size and precision (FP8/FP16 memory footprint).

- Bandwidth needs (HBM3E capacity and TB/s).

- Scale-out requirements (NVLink/Infinity Fabric topology).

- Multi-tenancy features and isolation needs (MIG/Guardian-like bounds checking).

- Kernel mix (compute-bound vs. memory-bound), as tensor cores may not help bandwidth-limited phases.
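As a first-pass sizing aid for the model-size and precision criterion, the sketch below estimates the weight footprint and the minimum number of GPUs needed to hold the weights. It counts weights only: activations, KV cache, and optimizer state are deliberately ignored, so real requirements are higher. The 70B and 400B parameter counts are hypothetical examples, the function names are illustrative, and 192 GB is the MI300X capacity quoted earlier.

```python
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def weights_gb(params_billions: int, precision: str) -> int:
    """Weight footprint in GB (1 GB = 1e9 bytes); weights only."""
    return params_billions * BYTES_PER_PARAM[precision]


def min_gpus(params_billions: int, precision: str, hbm_gb: int) -> int:
    """Fewest GPUs whose combined HBM can hold the weights."""
    return -(-weights_gb(params_billions, precision) // hbm_gb)  # ceiling


for params in (70, 400):  # hypothetical model sizes, in billions
    for precision in ("fp16", "fp8"):
        print(params, precision, weights_gb(params, precision),
              min_gpus(params, precision, 192))
```

Halving the bytes per parameter halves the footprint, which is why FP8 can reduce the number of accelerators a model has to be split across.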

Profiling remains essential: measure utilization, memory BW, cache hit rates, and overlap of copy/compute to expose bottlenecks.
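When profiling copy/compute overlap, it helps to know what the ideal looks like. The sketch below is a simplified timing model, not a profiler: it assumes every chunk takes the same fixed copy and compute time and that one copy can run concurrently with one compute step (double buffering). The chunk count and millisecond values are hypothetical.

```python
def serial_ms(chunks: int, copy_ms: int, compute_ms: int) -> int:
    """Each chunk is copied, then processed, with no overlap."""
    return chunks * (copy_ms + compute_ms)


def overlapped_ms(chunks: int, copy_ms: int, compute_ms: int) -> int:
    """Two-stage pipeline: copy of chunk i+1 overlaps compute of chunk i."""
    if chunks == 0:
        return 0
    # Fill the pipeline, run at the pace of the slower stage, then drain.
    return copy_ms + (chunks - 1) * max(copy_ms, compute_ms) + compute_ms


print(serial_ms(8, 2, 3))      # 40 ms without overlap
print(overlapped_ms(8, 2, 3))  # 26 ms with ideal overlap
```

A measured runtime close to the serial figure suggests that copies and kernels are not actually overlapping.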

Takeaways

GPUs are heterogeneous, throughput-first processors whose effectiveness stems from smart co-design of compute, memory, and interconnects. 2025 architectures extend performance via FP8 transformer engines, larger HBM stacks, and new programmability features, while research highlights the limits of tensor cores on bandwidth-bound kernels and advances safer multi-tenant sharing. Optimal results still require workload characterization, memory-aware optimization, and isolation-conscious scheduling.

- J. D. Owens, M. Houston, D. Luebke, S. Green, J. E. Stone and J. C. Phillips, “GPU Computing,” Proceedings of the IEEE, vol. 96, no. 5, pp. 879-899, May 2008, doi: 10.1109/JPROC.2008.917757.

- JaeSeok Lee, DongCheon Kim, Seog Chung Seo, “Parallel implementation of GCM on GPUs,” ICT Express, Volume 11, Issue 2, 2025, Pages 310-316, ISSN 2405-9595, https://doi.org/10.1016/j.icte.2025.01.006 (https://www.sciencedirect.com/science/article/pii/S2405959525000062)

- Kim, D., Choi, H. and Seo, S.C., “Parallel Implementation of SPHINCS+ With GPUs,” IEEE Transactions on Circuits and Systems I: Regular Papers, 2024.

- Yujuan Tan, Zhuoxin Bai, Duo Liu, Zhaoyang Zeng, Yan Gan, Ao Ren, Xianzhang Chen, Kan Zhong, “BGS: Accelerate GNN training on multiple GPUs,” Journal of Systems Architecture, Volume 153, 2024, 103162, ISSN 1383-7621, https://doi.org/10.1016/j.sysarc.2024.103162 (https://www.sciencedirect.com/science/article/pii/S1383762124000997)

- Javier Prades, Carlos Reaño, Federico Silla, “NGS: A network GPGPU system for orchestrating remote and virtual accelerators,” Journal of Systems Architecture, Volume 151, 2024, 103138, ISSN 1383-7621, https://doi.org/10.1016/j.sysarc.2024.103138 (https://www.sciencedirect.com/science/article/pii/S1383762124000754)