The 2025 product cycle delivered the largest leap in data-center GPU capability since the original Hopper launch. Vendors raced to pair ever-faster tensor engines with next-generation HBM3E stacks, PCIe Gen 5, and rack-scale fabrics—enabling trillion-parameter models to train or serve from a single rack.

This article reviews the four flagship accelerators that defined the year, compares their memory innovations, and explains what they mean for real-world AI deployments.

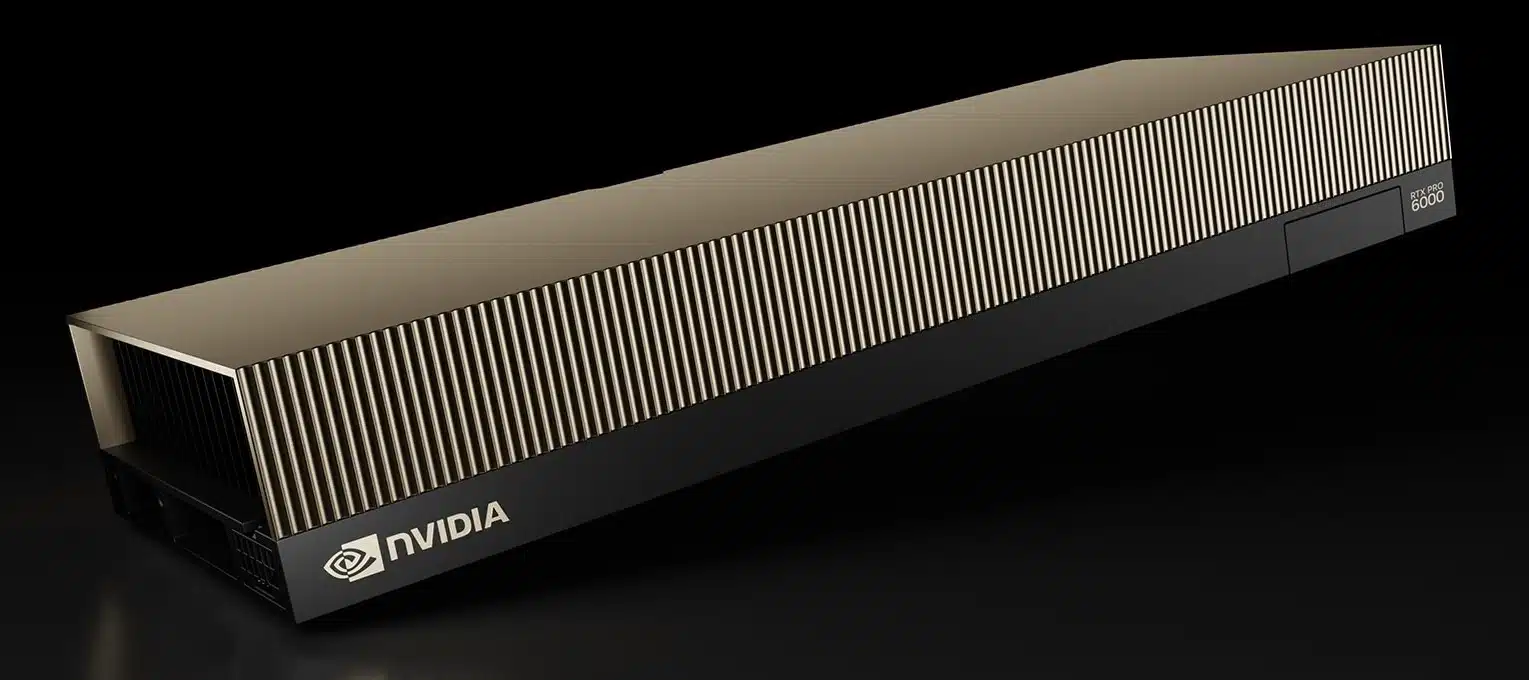

NVIDIA Blackwell B200/B100

Architecture highlights

- Multi-die “super-chip.” Two 4-nm dies share a 10-TB/s on-package fabric, presenting one massive accelerator to software.

- FP4 + FP8 Transformer Engine 2.0. NVIDIA claims mixed-precision support cuts inference energy by up to 25× versus H100 while sustaining more than 1 PFLOPS of mixed-precision compute per GB200 super-chip.

- NVLink-5 (1.8 TB/s per GPU). Up to 576 GPUs can appear as one memory-coherent domain, which is vital for 10-trillion-parameter LLMs.

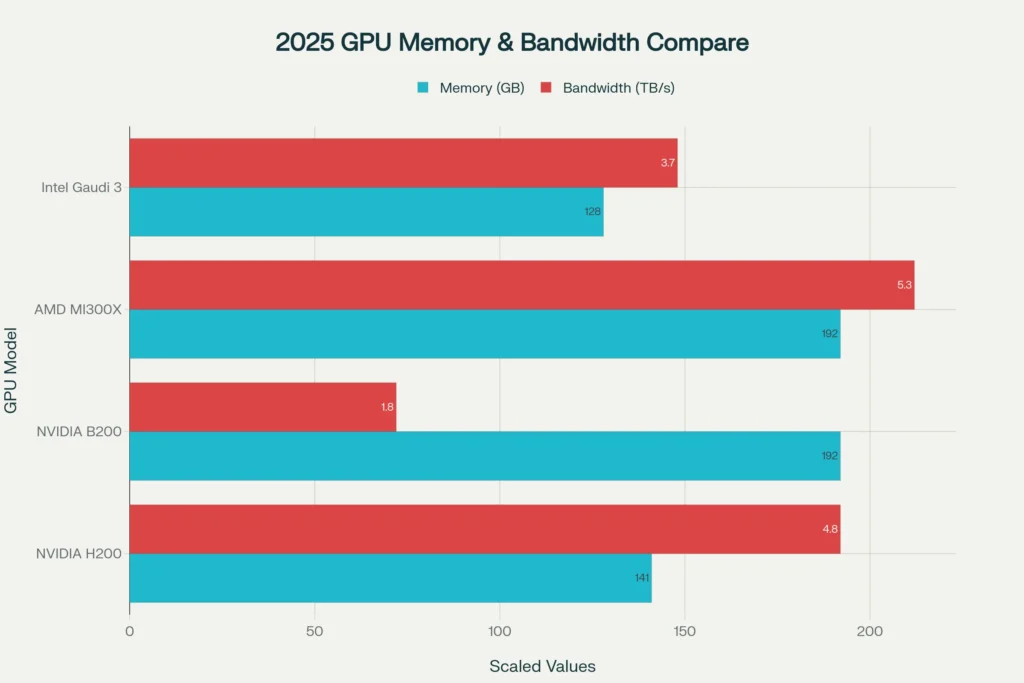

- HBM3E 192 GB option. Early disclosures indicate 8 × 24 GB or 12 × 16 GB stacks, offering up to 8 TB/s of memory bandwidth and about 36% more capacity than H200 (192 GB versus 141 GB).
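The capacity figures above reduce to simple multiplication. The Python sketch below works through the two stack layouts and then totals the HBM in a 72-GPU rack and a 576-GPU NVLink domain. It assumes every GPU carries the 192 GB option, which is an illustrative assumption rather than a confirmed shipping configuration, and the function names are hypothetical.

```python
# Sketch: HBM capacity arithmetic for the Blackwell figures quoted above.
# Assumption: every GPU in the rack or domain uses the 192 GB option.

def stack_capacity_gb(stacks: int, gb_per_stack: int) -> int:
    """Total HBM capacity of one GPU, in GB."""
    return stacks * gb_per_stack


def domain_memory_tb(gpus: int, gb_per_gpu: int) -> float:
    """Aggregate HBM across a group of GPUs, in decimal TB."""
    return gpus * gb_per_gpu / 1000


# Both disclosed stack layouts land on the same per-GPU capacity.
layouts = {
    "8 x 24 GB": stack_capacity_gb(8, 24),
    "12 x 16 GB": stack_capacity_gb(12, 16),
}

for name, capacity in layouts.items():
    print(f"{name}: {capacity} GB per GPU")

print(f"72-GPU rack: {domain_memory_tb(72, 192):.1f} TB of HBM")
print(f"576-GPU NVLink domain: {domain_memory_tb(576, 192):.1f} TB of HBM")
```

At 1 byte per parameter (FP8), a 10-trillion-parameter model needs roughly 10 TB for weights alone, so under these assumptions it fits within a single rack's HBM.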

Performance claims

NVIDIA positions a GB200 NVL72 rack (72 Blackwell GPUs + 36 Grace CPUs) at up to 30× the inference throughput of H100 while using up to 25× less energy on popular LLMs. Cloud providers report “sold-out” 2025 allocations, underscoring pent-up demand.

NVIDIA H200 (Hopper Refresh)

- World’s first HBM3E GPU—141 GB at 4.8 TB/s, 1.4 × the bandwidth of H100.

- Same compute as H100 across FP8, FP16, and FP32 (≈ 67 TFLOPS FP32), but far more memory capacity and bandwidth, which suits memory-bound generative-AI inference.

- Drop-in upgrade for existing HGX H100 racks; minimal requalification needed.
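To see why bandwidth matters more than compute for memory-bound inference, consider a rough ceiling on single-stream decode speed: each generated token has to stream every weight from HBM once, so tokens per second cannot exceed bandwidth divided by model size. The sketch below applies that bound. The H100 bandwidth is derived from the 1.4× ratio quoted above, and the 70 GB model (70 billion parameters at 1 byte each) is a hypothetical example. The bound ignores KV-cache traffic, batching, and compute limits.

```python
# Sketch: bandwidth-bound ceiling on batch-1 decode throughput.
# Assumptions: weights are read once per token; nothing else touches HBM.

def decode_tokens_per_s_upper_bound(bandwidth_tb_s: float, weights_gb: float) -> float:
    """Upper bound on tokens/s when every token streams all weights once."""
    return bandwidth_tb_s * 1000 / weights_gb


H200_BANDWIDTH_TB_S = 4.8                         # quoted above
H100_BANDWIDTH_TB_S = H200_BANDWIDTH_TB_S / 1.4   # derived from the 1.4x ratio

MODEL_WEIGHTS_GB = 70  # hypothetical: 70B parameters stored in FP8

h200_bound = decode_tokens_per_s_upper_bound(H200_BANDWIDTH_TB_S, MODEL_WEIGHTS_GB)
h100_bound = decode_tokens_per_s_upper_bound(H100_BANDWIDTH_TB_S, MODEL_WEIGHTS_GB)

print(f"H200 ceiling: {h200_bound:.1f} tokens/s")
print(f"H100 ceiling: {h100_bound:.1f} tokens/s")
print(f"Speedup: {h200_bound / h100_bound:.2f}x")
```

Under these assumptions the ceiling rises from about 49 to about 69 tokens per second, and the speedup tracks the bandwidth ratio exactly because compute is unchanged.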

AMD Instinct MI300X / MI325X

- 192 GB HBM3 (MI300X) and 256 GB HBM3E (MI325X)—the largest on-package memories shipping today.

- 5.3 TB/s bandwidth (MI300X) and up to 8 TB/s on MI350-series prototypes with Micron 12-high stacks.

- CDNA 3 chiplets + Infinity Fabric. 304 compute units, > 380 GB/s bidirectional GPU–GPU links, optional CPU/GPU APU variant (MI300A) for tightly coupled HPC workloads.

- MLPerf v5.1 shows MI300X/MI325X matching or beating H100 in several LLM inference tests while using fewer GPUs, thanks to their large on-package memory.

Intel Gaudi 3

- 128 GB HBM2e, 3.7 TB/s bandwidth: 1.5× the memory bandwidth of Gaudi 2 and, per Intel, 4× its BF16 compute.

- Open-Ethernet fabric (24 × 200 GbE). Avoids proprietary NVLink-style switches; scales to 1000-node clusters over standard RoCE.

- PCIe Gen 5 add-in cards (600 W TDP) let enterprises trial Gaudi 3 in existing servers without OAM backplanes.

- Intel claims 1.8× better inference performance per dollar than H100, positioning Gaudi 3 as a cost-efficient alternative for mid-scale AI clouds.
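The Ethernet fabric figure above converts to bytes per second with one division. The sketch below computes the raw aggregate line rate of the 24 ports and a best-case time to move a given amount of data over them. It ignores protocol overhead, congestion, and topology, and the helper names are hypothetical.

```python
# Sketch: raw line-rate arithmetic for Gaudi 3's 24 x 200 GbE ports.
# Assumption: all ports are usable at full rate in one direction at once.

def aggregate_ethernet_gb_s(ports: int, gbit_per_port: float) -> float:
    """Aggregate one-direction line rate in GB/s (8 bits per byte)."""
    return ports * gbit_per_port / 8


def transfer_seconds(data_gb: float, rate_gb_s: float) -> float:
    """Best-case time to move data_gb at rate_gb_s."""
    return data_gb / rate_gb_s


gaudi3_rate = aggregate_ethernet_gb_s(24, 200)
print(f"Aggregate line rate: {gaudi3_rate:.0f} GB/s per direction")

# Example: shipping the card's entire 128 GB of HBM off-device.
print(f"128 GB transfer: {transfer_seconds(128, gaudi3_rate):.3f} s at line rate")
```

Real collective operations will land below this ceiling, so the result is best read as an upper bound for capacity planning.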

Comparative Memory Landscape

The move from 80 GB (H100) to 192–256 GB per GPU fundamentally changes cluster design. Models whose weights and KV cache fit inside one device no longer need tensor-parallel sharding, which removes inter-GPU communication latency from each forward pass.
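Whether a model fits on one device comes down to parameter count times bytes per parameter, plus headroom for the KV cache and activations. The sketch below estimates the minimum number of GPUs needed at each capacity point mentioned in this article. The 20% headroom and the example model sizes are assumptions chosen for illustration, not measured values.

```python
import math

# Sketch: minimum GPU count needed to hold a model's weights.
# Assumption: 20% of each GPU's memory is reserved for KV cache and activations.

BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weights_gb(params_billion: float, fmt: str) -> float:
    """Weight footprint in GB: 1e9 params x bytes per param = GB."""
    return params_billion * BYTES_PER_PARAM[fmt]


def min_gpus(params_billion: float, fmt: str, gpu_gb: float, headroom: float = 0.2) -> int:
    """Smallest GPU count whose usable memory covers the weights."""
    usable_gb = gpu_gb * (1 - headroom)
    return math.ceil(weights_gb(params_billion, fmt) / usable_gb)


GPU_CAPACITY_GB = {"H100": 80, "MI300X / B200": 192, "MI325X": 256}

# Hypothetical workloads: a 70B model in FP16 and a 405B model in FP8.
for params, fmt in [(70, "fp16"), (405, "fp8")]:
    for name, capacity in GPU_CAPACITY_GB.items():
        count = min_gpus(params, fmt, capacity)
        print(f"{params}B {fmt} on {name} ({capacity} GB): {count} GPU(s)")
```

Under these assumptions a 70B FP16 model needs three 80 GB devices but only one 192 GB device, which is the single-device case where sharding is no longer required.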

Why 2025 Matters

- HBM3E maturity. Micron and Samsung began shipping 12-high, 36 GB stacks that each deliver more than 1.2 TB/s, enabling 288 GB GPUs (future MI355X) and 30 TB racks.

- Rack-scale packaging. NVIDIA’s NVL72 and AMD’s 8-way OAM trays ship as turnkey AI factories.

- Mixed-precision evolution. FP4 (Blackwell) and BF8 (Gaudi 3) push efficiency beyond FP8 while preserving accuracy for LLMs.

- Cost diversification. AMD and Intel now offer memory-rich or price-efficient alternatives, reducing the risk of single-vendor lock-in at hyperscale.

Choosing a 2025 Accelerator

| Decision Factor | Best Fit |
|---|---|
| Maximum memory per GPU | AMD MI325X / MI350 series |
| Lowest power per trillion tokens | NVIDIA Blackwell B200 |
| Drop-in upgrade for Hopper racks | NVIDIA H200 |
| Open Ethernet fabric and best price-performance | Intel Gaudi 3 |

Outlook

With a one-year cadence, vendors will likely unveil MI355X (CDNA 4 + 288 GB HBM3E) and Blackwell Refresh by late 2026, while Intel eyes Falcon Shores for GPU-CPU xPU convergence. For AI architects planning 2026 clusters, the 2025 class offers a staging ground: test larger FP8/FP4 models on memory-rich cards today, build software stacks for Ethernet or NVLink fabrics, and gather real TCO data before the next generational jump.

The 2025 data-center GPU lineup is the most diverse in a decade, giving enterprises unprecedented freedom to balance performance, memory, network topology, and cost. Whether you need to serve trillion-token chatbots or fine-tune open-source LLMs on-prem, there is now an accelerator tailored to your scale—and the race to ever larger models shows no sign of slowing.