GPU-Accelerated Scientific Computing: The Power of Parallel Processing

- Massive Performance Gains

- Cost-Effective Scaling

- Accelerated Research Cycles

- Future-Proof Scientific Infrastructure

Price Plans: GPU Servers for Scientific Computing

| Server | Price (monthly) | CPU | RAM | Storage | Data transfer | Public IPv4 | Data center |
|---|---|---|---|---|---|---|---|
| 2x A100 80GB GPU Server | $2300 | 2x Xeon Gold 6336Y | 256 GB | 1 TB SSD | Unlimited | 1 | Amsterdam, Netherlands |
| 2x NVIDIA RTX 6000 Ada Lovelace GPU Server | $1500 | 1x Xeon Silver 4410T | 128 GB | 1 TB SSD | Unlimited | 1 | Amsterdam, Netherlands |
| 2x NVIDIA A40 GPU Server | $1500 | 2x Xeon Gold 6326 | 128 GB | 1 TB SSD | Unlimited | 1 | Amsterdam, Netherlands |
| 1x RTX A4000 GPU Server | $370 | 1x Xeon Silver 4114 | 64 GB | 1 TB SSD | Unlimited | 1 | Amsterdam, Netherlands |
| 2x NVIDIA RTX 4000 Ada Lovelace GPU Server | $450 | 1x Xeon Silver 4410T | 128 GB | 1 TB SSD | Unlimited | 1 | Amsterdam, Netherlands |
| 2x NVIDIA A6000 GPU Server | $1500 | 1x Xeon Gold 6226R | 256 GB | 2 TB SSD | Unlimited | 1 | Amsterdam, Netherlands |
| 1x RTX 6000 Blackwell GPU Server | $900 | 1x Xeon Silver 4114 | 128 GB | 1 TB SSD | Unlimited | 1 | Amsterdam, Netherlands |

The Computational Revolution in Life Sciences

The life sciences landscape has fundamentally transformed over the past decade. What once took months of computational analysis now completes in hours. Molecular dynamics simulations that required supercomputer clusters now run on single workstations.

Drug discovery pipelines that cost millions and spanned years now accelerate to weeks with predictive accuracy that seemed impossible just a few years ago.

This revolution isn’t driven by incremental improvements—it’s powered by Graphics Processing Units (GPUs) that have redefined what’s computationally possible in biological research.

From decoding the human genome to designing life-saving therapeutics, GPU acceleration has become the backbone of modern computational biology, enabling discoveries that directly impact human health and scientific understanding.

The numbers tell a compelling story: researchers report speedups of 50-700× over traditional CPU-based methods, cost reductions of up to 90%, and the ability to process datasets that were previously impossible to analyze. GPU servers are revolutionizing life sciences research and have become essential infrastructure for any serious computational biology initiative.

Salient Features of Computeman Scientific Computing

Modern GPU architectures include features specifically valuable for life sciences.

High-Bandwidth Memory (HBM)

Delivers 2-3 TB/s memory throughput for processing massive scientific datasets like genomic sequences, protein structures, and simulation results without data bottlenecks that cripple traditional systems.

Tensor Cores

Accelerate AI/ML workloads critical for protein folding prediction and drug discovery. Tensor cores represent a specialized breakthrough in GPU architecture specifically designed for the matrix-heavy computations that dominate modern AI-driven life sciences.

Double-Precision Support

Ensures numerical accuracy for sensitive molecular calculations. Double precision (FP64) is essential for molecular dynamics, quantum chemistry, and climate modeling, where numerical accuracy directly affects scientific validity. It limits the accumulated rounding errors that can invalidate months of research.
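To make the precision point concrete, here is a minimal sketch that adds a small increment many times, much as a long time-stepping simulation does, once in single and once in double precision. The step size and step count are arbitrary illustrative values, and NumPy stands in for whatever numerical backend a real package uses.

```python
import numpy as np

def accumulated_error(dtype, n_steps=1_000_000, step=0.1):
    """Absolute error after adding `step` sequentially `n_steps` times in `dtype`."""
    increments = np.full(n_steps, step, dtype=dtype)
    # cumsum adds element by element, like a time-stepping loop would
    running_total = np.cumsum(increments)[-1]
    exact = n_steps * step
    return abs(float(running_total) - exact)

err_single = accumulated_error(np.float32)  # FP32
err_double = accumulated_error(np.float64)  # FP64
print(f"float32 error: {err_single:.6g}")
print(f"float64 error: {err_double:.6g}")
```

The single-precision total drifts visibly from the exact answer while the double-precision total stays close to it, which is why long-running simulations often keep at least their accumulators in FP64.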

NVMe Storage Performance

NVMe drives deliver 3,000-7,000 MB/s sequential throughput, up to 12× faster than SATA storage. For scientific applications, this means large trajectory files load in seconds instead of minutes, enabling interactive analysis and real-time visualization. Simulations also restart from saved states in seconds rather than minutes, minimizing downtime from system maintenance or unexpected interruptions.
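As a rough back-of-the-envelope check, the sketch below estimates ideal sequential read times from file size and drive throughput. The 50 GB trajectory size and the 550 MB/s SATA figure are assumptions chosen for illustration; the 7,000 MB/s NVMe figure is the upper end of the range quoted above. Real load times also depend on the filesystem and on how the file is parsed.

```python
def load_time_seconds(file_size_gb, throughput_mb_per_s):
    """Ideal sequential read time, ignoring filesystem and parsing overhead."""
    return file_size_gb * 1000 / throughput_mb_per_s

TRAJECTORY_GB = 50   # hypothetical trajectory file size
SATA_MB_S = 550      # assumed typical SATA SSD throughput
NVME_MB_S = 7000     # upper end of the NVMe range quoted above

sata_seconds = load_time_seconds(TRAJECTORY_GB, SATA_MB_S)
nvme_seconds = load_time_seconds(TRAJECTORY_GB, NVME_MB_S)
print(f"SATA: {sata_seconds:.1f} s, NVMe: {nvme_seconds:.1f} s, "
      f"ratio: {sata_seconds / nvme_seconds:.1f}x")
```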

NVLink Interconnects

Enable multi-GPU scaling for massive simulations and high memory throughput. While a PCIe 3.0 x16 connection between GPUs tops out at about 32 GB/s of bidirectional bandwidth, NVLink delivers up to 900 GB/s of inter-GPU communication (the exact figure depends on the GPU generation), enabling true multi-GPU scaling for computationally intensive workloads. Large biomolecular systems are distributed across multiple NVLink-connected GPUs, with atoms and forces calculated in parallel and kept in sync between steps.
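One simple way to picture how a large system is divided across GPUs is spatial decomposition: each GPU owns the atoms in one slab of the simulation box. The sketch below is only an illustration of that idea with NumPy on random coordinates. The function name and the slab scheme are assumptions, and production MD engines use more elaborate decompositions plus boundary exchange between neighbors.

```python
import numpy as np

def split_into_slabs(positions, n_gpus):
    """Assign each atom to one of `n_gpus` equal-width slabs along the x axis."""
    x = positions[:, 0]
    edges = np.linspace(x.min(), x.max(), n_gpus + 1)
    # Use interior edges only, so slab ids fall in 0..n_gpus-1
    return np.digitize(x, edges[1:-1])

rng = np.random.default_rng(0)
positions = rng.uniform(0.0, 10.0, size=(100_000, 3))  # synthetic atom coordinates
owner = split_into_slabs(positions, n_gpus=4)
atoms_per_gpu = np.bincount(owner, minlength=4)
print(atoms_per_gpu)
```

Atoms near a slab boundary interact with atoms owned by the neighboring GPU, and exchanging those boundary atoms every step is the traffic that a fast interconnect speeds up.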

Cost Transformation

A single GPU server often replaces 20-50 CPU servers for parallel workloads, reducing hardware, power, and cooling costs by 70-90%. Scientists spend time interpreting results rather than waiting for computations, increasing research productivity by 3-10×. Faster computational cycles enable rapid iteration and earlier discovery of promising research directions. GPU acceleration reduces cloud computing costs by 50-80% through dramatic runtime reductions.

Computational Biology and Systems Analysis

Beyond specific applications, GPUs accelerate fundamental computational biology methods:

Phylogenetic Analysis

Construct evolutionary trees from genomic data using maximum likelihood and Bayesian methods. GPU acceleration enables analysis of datasets with thousands of species.

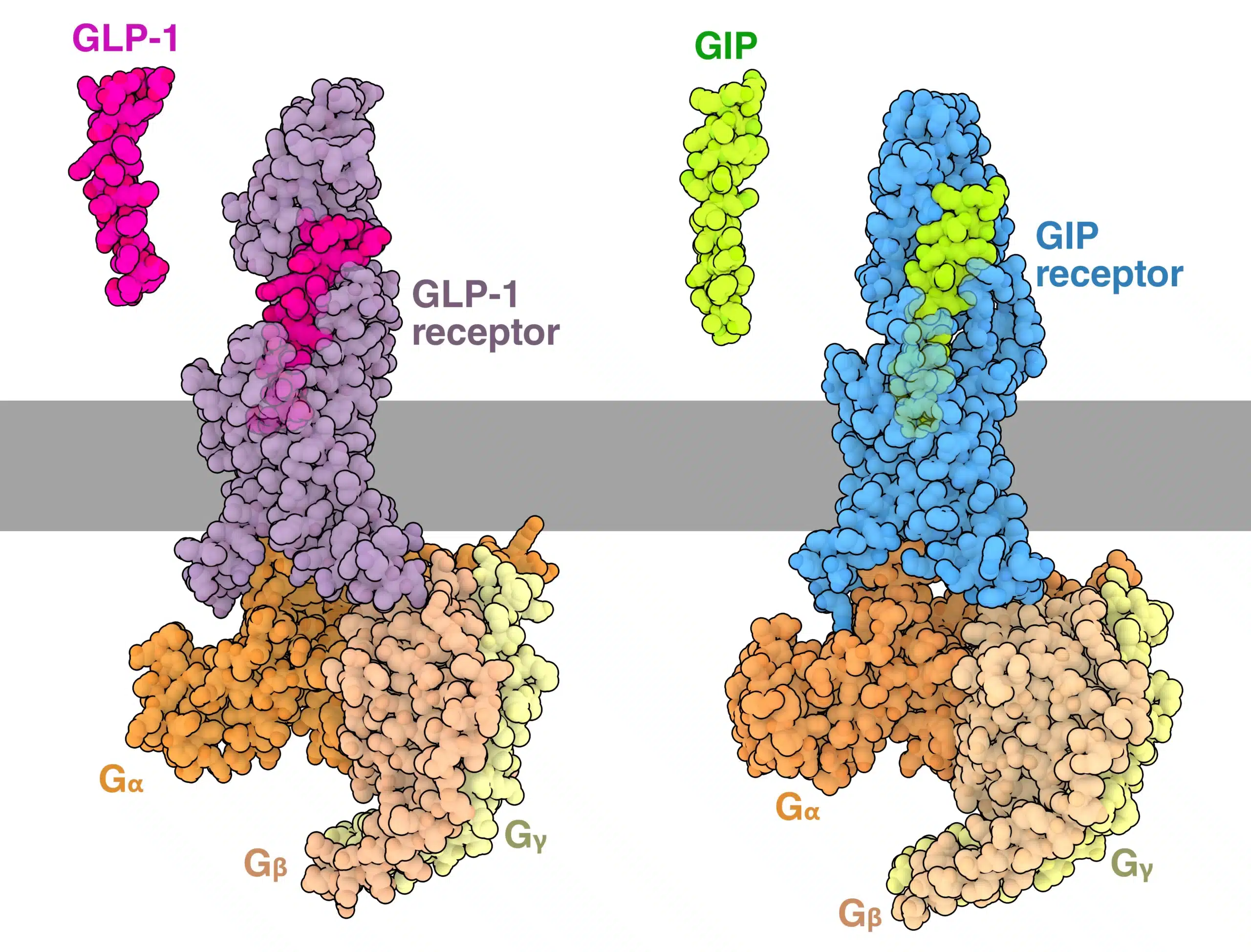

Protein Structure Prediction

AI models like AlphaFold predict protein structures from amino acid sequences and run efficiently on GPUs. Training such models required massive accelerator clusters, but the trained models now put protein structure analysis within reach of any researcher.

Systems Biology Modeling

Simulate complex biological networks including gene regulation, metabolic pathways, and cell signaling. GPU parallelization enables whole-cell models with thousands of components.

Bioinformatics Visualization

Render complex biological structures and datasets in real-time for interactive exploration. GPU-accelerated visualization enables researchers to explore molecular structures, genomic landscapes, and biological networks intuitively.

Frequently Asked Questions

Do I need special programming skills to use GPU-accelerated scientific software?

Most established scientific software packages (AMBER, GROMACS, ImageJ, R with RAPIDS) include GPU acceleration that requires little or no code change: install a GPU-enabled build and run. You don’t need CUDA programming knowledge. However, custom analysis scripts may require GPU-aware libraries like CuPy or RAPIDS to achieve maximum performance.
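For custom scripts, a common pattern is to write array code once and pick the backend at runtime. The sketch below assumes CuPy may or may not be installed; it uses CuPy when a CUDA device is available and falls back to NumPy otherwise. The helper names `get_array_module` and `pairwise_distances` are illustrative, not part of either library.

```python
import numpy as np

def get_array_module():
    """Return CuPy if it is installed and a CUDA device is usable, else NumPy."""
    try:
        import cupy as cp
        if cp.cuda.runtime.getDeviceCount() > 0:
            return cp
    except Exception:
        # CuPy is missing, or there is no working CUDA driver/device
        pass
    return np

xp = get_array_module()

def pairwise_distances(coords):
    """All-pairs Euclidean distances between the rows of `coords`."""
    coords = xp.asarray(coords)
    diff = coords[:, None, :] - coords[None, :, :]
    return xp.sqrt((diff ** 2).sum(axis=-1))

points = [[0.0, 0.0, 0.0], [3.0, 4.0, 0.0], [0.0, 0.0, 1.0]]
distances = pairwise_distances(points)
print(xp.__name__, float(distances[0, 1]))
```

Because CuPy mirrors much of the NumPy API, the same function body runs on either backend; only the array module changes.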

What about software licensing and compatibility?

Most scientific software vendors support GPU acceleration with standard licenses. Popular packages like MATLAB, Mathematica, and specialized tools include GPU support. Open-source software (Python, R, Bioconductor) works seamlessly. We can help validate software compatibility before deployment.

Do you provide support for scientific software installation and optimization?

Absolutely. Our scientific computing specialists help install, configure, and optimize research software for maximum GPU performance. We also provide ongoing support for troubleshooting, performance tuning, and scaling applications across multiple GPUs as your research grows.

Which scientific applications benefit most from GPU acceleration?

Applications with heavy parallel computation see the biggest gains: molecular dynamics (GROMACS, AMBER), genomics analysis (GATK, Parabricks), computational fluid dynamics, climate modeling, and AI/machine learning for biological research. Single-threaded or I/O-bound applications see minimal improvement.

Can I run multiple research projects on the same GPU server?

Yes, through containerization (Docker/Singularity) and job scheduling systems. However, GPUs perform best with dedicated access during intensive computations. Many teams use one GPU for interactive development while reserving others for production runs, or implement time-sharing for different research groups.
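A lightweight way to reserve one GPU for interactive work and another for production runs is the `CUDA_VISIBLE_DEVICES` environment variable, which limits the devices a CUDA process can see. The sketch below only builds the environments; the helper name and the commented-out script name are hypothetical, and a job scheduler or container runtime would normally handle this for shared servers.

```python
import os

def env_for_gpus(gpu_ids, base_env=None):
    """Copy of the environment that exposes only the listed GPU indices to CUDA."""
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    return env

interactive_env = env_for_gpus([0])  # GPU 0 for interactive development
production_env = env_for_gpus([1])   # GPU 1 for production runs

# Hypothetical launch of a production job restricted to GPU 1:
# subprocess.run(["python", "run_simulation.py"], env=production_env)
print(interactive_env["CUDA_VISIBLE_DEVICES"], production_env["CUDA_VISIBLE_DEVICES"])
```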

How do GPU servers compare cost-wise to CPU clusters or cloud computing?

A single GPU server often replaces 10-50 CPU nodes at 60-80% lower total cost including power, cooling, and space. For cloud users, GPU acceleration typically reduces runtime so dramatically that total compute costs drop 50-90% despite higher hourly rates. The ROI usually justifies itself within the first major research project.
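The cloud claim comes down to simple arithmetic: total cost is hourly rate times runtime, so a pricier instance wins whenever the speedup exceeds the price ratio. The sketch below works through one case. All rates, the runtime, and the speedup are hypothetical numbers chosen for illustration, not quotes from any provider.

```python
def job_cost(hourly_rate, runtime_hours):
    """Total compute cost of one job."""
    return hourly_rate * runtime_hours

CPU_RATE = 2.0     # hypothetical $/hour for a CPU instance
GPU_RATE = 10.0    # hypothetical $/hour for a GPU instance
CPU_HOURS = 100.0  # hypothetical CPU runtime of the job
SPEEDUP = 25.0     # hypothetical GPU speedup for this workload

cpu_cost = job_cost(CPU_RATE, CPU_HOURS)
gpu_cost = job_cost(GPU_RATE, CPU_HOURS / SPEEDUP)
saving = 1.0 - gpu_cost / cpu_cost
print(f"CPU: ${cpu_cost:.0f}, GPU: ${gpu_cost:.0f}, saving: {saving:.0%}")
```

With these numbers the GPU instance costs five times more per hour, so any speedup above 5× lowers the total bill; below that, the CPU instance remains cheaper.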

How much GPU memory do I need for different scientific workloads?

Memory requirements vary significantly: small molecular systems need 8-16 GB, medium protein complexes require 24-48 GB, large biomolecular simulations demand 80+ GB, and population genomics or AI model training often needs 160+ GB across multiple GPUs. We help size systems based on your specific research needs.
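A first-pass sizing estimate divides the memory a workload needs by the memory on each card. The sketch below does only that; it assumes the workload can be split evenly across GPUs and ignores per-GPU overhead such as replicated data and communication buffers, so treat the result as a lower bound.

```python
import math

def gpus_needed(required_gb, gpu_memory_gb):
    """Minimum number of GPUs whose combined memory covers `required_gb`."""
    return math.ceil(required_gb / gpu_memory_gb)

# 160 GB workload on 80 GB cards (such as the A100 80GB listed above)
print(gpus_needed(160, 80))
# 100 GB workload on the same cards still needs two
print(gpus_needed(100, 80))
```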

How much faster are GPU servers for scientific computing compared to traditional CPU clusters?

Performance gains vary by application, but most scientific workloads see 20-200× speedups. Molecular dynamics simulations achieve 50-700× acceleration, genomics pipelines run 10-50× faster, and AI-driven drug discovery completes in hours instead of weeks. The exact speedup depends on how well your specific application leverages parallel processing.
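One standard way to reason about this dependence is Amdahl's law: if only a fraction of the runtime can be accelerated, the serial remainder caps the overall speedup. The sketch below applies the formula to two illustrative cases; the fractions and the 200× accelerator speedup are assumed values, not measurements.

```python
def amdahl_speedup(parallel_fraction, accelerator_speedup):
    """Overall speedup when only `parallel_fraction` of the runtime is accelerated."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / accelerator_speedup)

# 99% of the runtime parallelizes and runs 200x faster on the GPU
mostly_parallel = amdahl_speedup(0.99, 200)
# Only half of the runtime parallelizes
half_parallel = amdahl_speedup(0.50, 200)
print(f"{mostly_parallel:.1f}x vs {half_parallel:.2f}x")
```

Even a 1% serial portion cuts a 200× kernel speedup to roughly a third of that overall, which is why I/O-bound or single-threaded stages deserve attention before buying more GPUs.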

AI-Powered Drug Discovery

Modern drug discovery increasingly depends on generative AI models that design novel molecular compounds with desired therapeutic properties. Tensor cores accelerate the training of these sophisticated neural networks, enabling pharmaceutical researchers to explore vast chemical spaces, predict molecular behavior, and identify promising drug candidates at unprecedented speed.

What once required years of wet-lab experimentation now happens in silico through tensor core-accelerated molecular generation and property prediction models.

The result is a fundamental shift in biological research: from hypothesis-limited to computation-limited discovery, where tensor cores provide the computational muscle to test thousands of hypotheses simultaneously and accelerate the path from scientific curiosity to life-saving treatments.

Transformative Applications Across Life Sciences

Molecular Dynamics and Structural Biology

Molecular dynamics (MD) simulations model how biological molecules move and interact over time—fundamental for understanding protein folding, drug binding, and enzymatic reactions. Traditional CPU-based MD limited researchers to small systems or short timescales due to computational constraints.

GPU acceleration has revolutionized this field:

- 700× speedup: Complete protein simulations achieve over 700× acceleration compared to single CPU cores.

- Larger systems: Simulate protein complexes with hundreds of thousands of atoms.

- Longer timescales: Extend simulations from nanoseconds to microseconds, capturing biologically relevant motions.

- Real-time analysis: Interactive visualization and steering of molecular simulations.

Popular MD software packages like AMBER, GROMACS, and YASARA now include GPU acceleration as standard features, delivering performance previously requiring expensive supercomputers.
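The jump from nanoseconds to microseconds is easier to appreciate as a step count. The sketch below assumes a 2 fs integration timestep, a common choice but not a universal one, and uses hypothetical throughput figures (in ns/day) to convert simulated time into wall-clock time.

```python
def md_steps(simulated_ns, timestep_fs=2.0):
    """Number of integration steps for `simulated_ns` nanoseconds (1 ns = 1e6 fs)."""
    return round(simulated_ns * 1e6 / timestep_fs)

def wall_clock_days(simulated_ns, ns_per_day):
    """Days of runtime at a given simulation throughput."""
    return simulated_ns / ns_per_day

ONE_MICROSECOND_NS = 1000
print(md_steps(ONE_MICROSECOND_NS))             # steps needed for 1 microsecond
print(wall_clock_days(ONE_MICROSECOND_NS, 10))  # hypothetical CPU throughput: 10 ns/day
print(wall_clock_days(ONE_MICROSECOND_NS, 500)) # hypothetical GPU throughput: 500 ns/day
```

At half a billion steps per microsecond, throughput per day decides whether a microsecond-scale study takes months or days.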

Genomics and Personalized Medicine

The genomics revolution generates massive datasets requiring intensive computational analysis. A single human whole-genome sequence produces ~100 GB of raw data that must be processed through complex pipelines including read alignment, variant calling, and annotation.

GPU acceleration transforms genomics workflows:

- Sequence Alignment: Map millions of DNA reads to reference genomes up to 50× faster than CPU-only approaches. GPU-accelerated implementations of aligners such as BWA-MEM and Minimap2, for example those in NVIDIA Clara Parabricks, cover both short and long-read sequencing.

- Variant Calling: Identify genetic differences between samples with GPU-accelerated algorithms like NVIDIA Clara Parabricks, reducing analysis time from days to hours. A complete human genome analysis completes in under 30 minutes using multi-GPU systems.

- Population Genomics: Analyze thousands of genomes simultaneously to identify disease associations and evolutionary patterns. GPU-accelerated tools enable studies with sample sizes previously impossible.

- Real-time Analysis: Process genomic data as it streams from sequencing instruments, enabling immediate clinical decision-making.

The NVIDIA Clara Parabricks suite exemplifies this transformation, delivering 30-50× speedups while maintaining perfect concordance with established analysis pipelines. What once required compute clusters now runs on single GPU servers.
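Concordance between two pipelines can be checked by comparing the variants each one reports. The sketch below uses a deliberately simple definition, the share of all reported variants that both pipelines agree on, and made-up toy records. Real validation tools compare genotypes and report precision and recall separately, so this is an illustration of the idea rather than a validation method.

```python
def concordance(calls_a, calls_b):
    """Fraction of variants reported by either pipeline that both report."""
    a, b = set(calls_a), set(calls_b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0

# Toy variant records: (chromosome, position, reference allele, alternate allele)
cpu_calls = {("chr1", 10177, "A", "AC"), ("chr1", 10352, "T", "TA"), ("chr2", 500, "G", "C")}
gpu_calls = {("chr1", 10177, "A", "AC"), ("chr1", 10352, "T", "TA"), ("chr2", 500, "G", "C")}

print(concordance(cpu_calls, gpu_calls))
```

A value of 1.0 means the two call sets are identical under this definition; any variant found by only one pipeline lowers it.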

Drug Discovery and Pharmaceutical Research

Drug discovery represents one of GPU computing’s most impactful applications. The traditional pharmaceutical pipeline requires 10-15 years and costs $1-3 billion per approved drug. GPU acceleration attacks this challenge on multiple fronts:

- Virtual Screening: Test millions of compounds against biological targets in hours rather than months. GPU-accelerated docking algorithms evaluate molecular binding affinity with near-experimental accuracy.

- De Novo Drug Design: Generative AI models running on GPUs create novel molecular structures with desired therapeutic properties. These approaches can design entirely new drugs rather than screening existing compound libraries.

- ADMET Prediction: Artificial intelligence models predict how drugs will be absorbed, distributed, metabolized, and eliminated by the human body, identifying problems before expensive clinical trials.

- Molecular Dynamics: Simulate drug-target interactions at atomic resolution to understand binding mechanisms and optimize compound properties.

Companies report dramatic improvements: molecular docking calculations that took weeks now complete in hours, virtual screening pipelines process 10-100× larger compound libraries, and AI-driven design cycles iterate daily instead of monthly.

Testimonials

“Our molecular dynamics simulations went from taking 3 weeks to completing in 8 hours—that’s a 63× speedup that transformed our entire research workflow. We can now test dozens of protein conformations and drug binding scenarios that were simply impossible before. The GPU server paid for itself in the first month through saved compute costs alone, and the scientific breakthroughs we’ve achieved since then are priceless.”

Dr. Sarah Chen, Computational Biology Lab, Stanford University